DisPerSion.eLabOrate

DisPerSion.eLabOrate is an interactive sound environment defined by a ceiling array of microphones, analysis of the audio input, and synthesis driven directly by that analysis. The project was first developed during Van Nort’s 2019 research-creation graduate course Vertical Studio/Lab, which posed the challenge: “Can we augment – but not detract from – Deep Listening practice(s) using immersive and interactive media?” As one very successful outcome of this 12-week workshopping and development process, eLabOrate was then further refined and explored through studies involving additional participants.

The primary goal of the project is to augment an environment structured by performance of Pauline Oliveros’ text score The Tuning Meditation, and to gauge the perceptions that emerge from participants who perform in collaboration with the system.

The system generates an environment in which it “vocalizes” its own output as a product of what it hears, including ambient sound, its own feedback, and participant input.

The Tuning Meditation score asks participants to alternate making vocal tones and then matching tones that another player is making. This alternation happens on each exhalation, resulting in overlapping and flowing tones which may move through synchronous and harmonious cycles, or dissonant and beating waves. This cycle of new tones and matching continues until a natural end is reached.

System Design

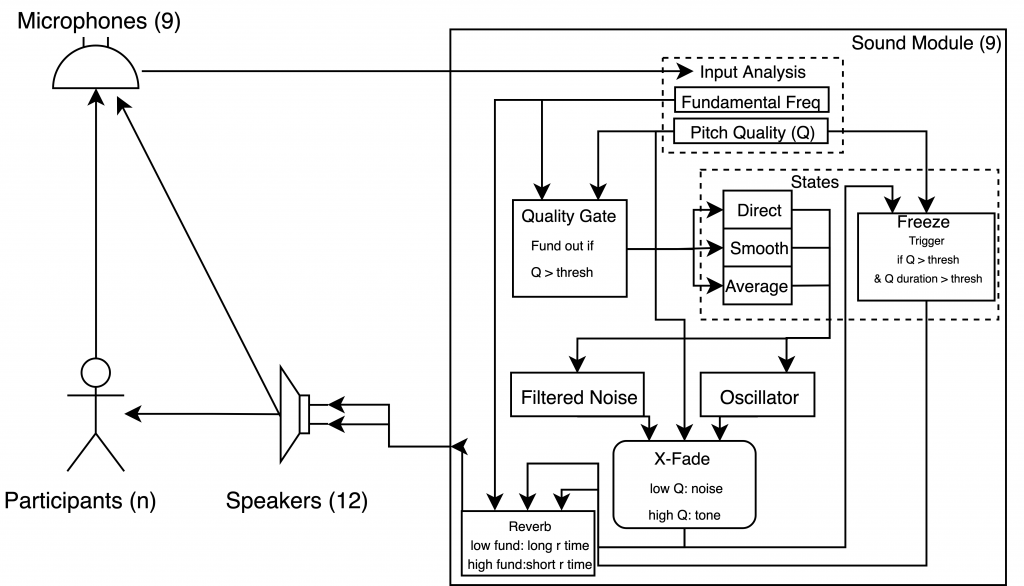

The system uses 12 floor-mounted speakers for output, equally spaced throughout the performance venue, and a 3×3 array of omnidirectional microphones suspended from the ceiling grid.

DisPerSion.eLabOrate was created in Max and consists of nine separate synthesis and analysis modules (one per ceiling microphone). The placement of the microphones in relation to the speakers promotes controlled feedback from the system’s output back to its input. The sensitivity of each input was tuned to allow clear detection of participants in each area of the space while ensuring that ringing and intense feedback were not possible; the system only coaxes out delay-like tails from machine output driven by human activity.

Audio input is analyzed for fundamental frequency and pitch quality (an estimate of the confidence of the analysis). Low pitch-quality values correspond to “noisy” input, while high values indicate input closer to a pure tone. The analyzed fundamental drives the frequency of the synth oscillator as well as the centre frequency of a resonant band-pass filter, which moves between filtered noise and the generated tone. Values are sent to the synth only if a quality threshold is passed, which prevents unintentional ambient sound or noise from being treated as input.
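The quality gate described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Max patch itself: the names `SynthParams` and `gate_analysis`, the 0 to 1 range for pitch quality, and the threshold value of 0.8 are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SynthParams:
    """Hypothetical container for the values one module sends to its synth."""
    osc_freq_hz: float       # frequency of the synth oscillator
    filter_centre_hz: float  # centre frequency of the resonant band-pass filter


def gate_analysis(f0_hz: float, quality: float,
                  threshold: float = 0.8) -> Optional[SynthParams]:
    """Return synth parameters only when pitch quality passes the threshold.

    Assumptions for this sketch: quality lies in [0, 1] and 0.8 is an
    arbitrary illustrative threshold, not a value from the actual system.
    """
    if quality < threshold:
        # Noisy or ambient input: nothing is sent to the synth.
        return None
    # The same fundamental drives both the oscillator and the filter centre.
    return SynthParams(osc_freq_hz=f0_hz, filter_centre_hz=f0_hz)
```

Under these assumptions, a clear sung tone such as `gate_analysis(220.0, 0.95)` yields parameters at 220 Hz, while a low-confidence frame such as `gate_analysis(220.0, 0.3)` yields `None` and leaves the synth untouched.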

Each synthesis module can be set in one of four states to alter the behaviour of the resulting output. The four states are:

- Direct: fundamental is immediately reflected in synth oscillator and filtered noise

- Smooth: values are sent to the synth over a desired ramp time

- Average: calculates a running mean of fundamental over a given time window and passes result

- Freeze: triggers a spectral freeze on exceeding pitch quality threshold and quality duration
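As a rough illustration of how the four states differ, the sketch below processes one analysis frame at a time. It is a simplification under stated assumptions rather than the module's actual implementation: the class `PitchMapper`, its parameter names, and the frame-based timing are hypothetical; Smooth is approximated by moving a fixed fraction of the remaining distance per frame (not a true linear ramp); and Freeze models only the trigger condition, holding a frequency as a stand-in for the spectral freeze.

```python
from collections import deque
from enum import Enum


class Mode(Enum):
    DIRECT = "direct"
    SMOOTH = "smooth"
    AVERAGE = "average"
    FREEZE = "freeze"


class PitchMapper:
    """Hypothetical per-module mapper from analysed fundamental to synth frequency."""

    def __init__(self, mode, ramp_frames=4, window_frames=4,
                 quality_threshold=0.8, hold_frames=3):
        self.mode = mode
        self.ramp_frames = ramp_frames              # Smooth: frames to approach target
        self.history = deque(maxlen=window_frames)  # Average: running window
        self.quality_threshold = quality_threshold  # Freeze: required quality
        self.hold_frames = hold_frames              # Freeze: required duration
        self.current = None    # last frequency sent to the synth
        self.good_frames = 0   # consecutive frames at or above the threshold
        self.frozen = False

    def step(self, f0_hz, quality):
        if self.mode is Mode.DIRECT:
            # Fundamental is reflected immediately.
            self.current = f0_hz
        elif self.mode is Mode.SMOOTH:
            if self.current is None:
                self.current = f0_hz
            else:
                # Move 1/ramp_frames of the remaining distance each frame.
                self.current += (f0_hz - self.current) / self.ramp_frames
        elif self.mode is Mode.AVERAGE:
            # Running mean over the most recent window_frames values.
            self.history.append(f0_hz)
            self.current = sum(self.history) / len(self.history)
        elif self.mode is Mode.FREEZE:
            # Count how long quality has stayed at or above the threshold.
            if quality >= self.quality_threshold:
                self.good_frames += 1
            else:
                self.good_frames = 0
            if self.good_frames >= self.hold_frames and not self.frozen:
                self.frozen = True
                self.current = f0_hz  # held value stands in for the frozen spectrum
        return self.current
```

For example, with `PitchMapper(Mode.AVERAGE)`, feeding 200 Hz and then 220 Hz returns 210 Hz on the second frame, whereas `Mode.DIRECT` would return 220 Hz.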

Player Responses

Player responses indicated a tendency to characterize the system as an equal collaborator during the piece, with one participant stating: “…the electronics held (the) same importance as the other performers”. For more detailed discussion of what emerged from these sessions, please see the publications below.

Publications

Hoy, R., D. Van Nort, “Augmentation of Sonic Meditation Practices: Resonance, Feedback and Interaction through an Ecosystemic Approach”, in: Kronland-Martinet R., Ystad S., Aramaki M. (eds) Perception, Representations, Image, Sound, Music. Lecture Notes in Computer Science, vol. 12631, 2021. [Springer Link]

Hoy, R., D. Van Nort, “An Ecosystemic Approach to Augmenting Sonic Meditation Practices”, in Proc. of the 14th International Symposium on Computer Music Multidisciplinary Research (CMMR), 2019. [Research Gate]